On: Apple Intelligence

If I’m being honest, I’m not really concerned about Apple’s ability to make this happen

So it seems that Apple is going to have to delay a lot of different features for Apple Intelligence, specifically around Siri’s ability to work with apps. There’s a large population of people who think this is a death knell for Apple, but I honestly don’t really think it is.

Comparing to Other AI Companies

So a lot of people are comparing what Apple’s doing to things like ChatGPT and Operator, and in that regard Apple is way behind. But that’s not what Apple’s building. Not all AI is the same thing, even if it’s attempting to produce the same output. Apple’s approach is still novel here: they’re pushing for on-device intelligence that can take actions within apps across the device by expanding the functionality of the App Intents framework. App Intents exist in iOS now, and they’re what developers already use to expose app functionality to Siri. This approach would expand what’s possible with App Intents and allow Siri to chain them together. Processing all of this on device is difficult, and I can see why Apple is having issues with it.

What Could Apple Do

Expand Private Cloud Compute

The first thing Apple could do is just not do this on device and instead focus on expanding the amount of functionality available in Private Cloud Compute. This would be an interesting play, but also not a trivial task. The thing is: Private Cloud Compute isn’t just a server that can run Apple Intelligence. The functionality that the on-device models and the Private Cloud Compute infrastructure provide isn’t the same, and one can’t do the other’s job. Apple would effectively have to rebuild the foundation model to offload more functionality to Private Cloud Compute, which means more training and more cost.

Open Up App Intents

The other thing Apple could do is open up these App Intent hooks to third-party systems. This would allow third parties to start building replacements for Siri. This would also be an interesting play (and I bet it would make the EU pretty happy too), but I think this is just less realistic, at least for now. A third-party model wouldn’t be able to just do things; it would have to be trained on the functionality of App Intents. They’re functions built into the code of the app, not something similar to prompts. This is probably the less likely scenario.
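To make the "functions, not prompts" point a bit more concrete, here is a minimal sketch of what an App Intent roughly looks like in Swift. The intent name, its parameter, and the imaginary notes app are all made up for illustration, and a real app would do actual work inside `perform()`.

```swift
import AppIntents

// Hypothetical intent for an imaginary notes app; every name here is illustrative.
struct CreateNoteIntent: AppIntent {
    // Human-readable name the system can show for this action.
    static let title: LocalizedStringResource = "Create Note"

    // A typed input the caller has to fill in before the intent can run.
    @Parameter(title: "Text")
    var text: String

    // The app's own code runs here and hands back a typed result.
    func perform() async throws -> some IntentResult & ReturnsValue<String> {
        // A real app would save the note; this sketch only echoes it back.
        return .result(value: "Saved note: \(text)")
    }
}
```

The thing to notice is that the caller gets a typed parameter and a typed return value, not free-form text. Chaining intents would presumably mean feeding one intent's result into another's parameters, which is why a model has to understand this structure and can't just be prompted.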

Wrap-Up

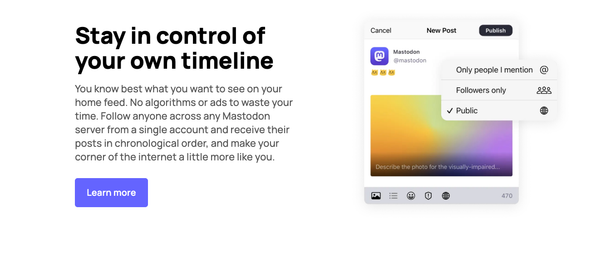

So I really think this reaction comes from a misunderstanding of what Apple’s doing and how they’re doing it. Nobody’s ever accomplished this functionality through this method before, and it is ultimately a cheaper, more secure, and more environmentally friendly approach to AI. Even Apple’s server infrastructure for Private Cloud Compute is running on efficient Apple Silicon chips, not power-hungry Nvidia cards. I think it’s cool, and I think that people are too quick to dismiss their approach. I don’t think they’re out of the game. They still have an interesting idea and I hope they’re able to see it through to the end. Thoughts? You can find me on Bluesky and Mastodon.