Who Is All This Power For?

As Apple’s silicon has evolved over the past few years, people keep asking, “Who can actually use all this power?” Well, here’s who.

Apple’s latest powerhouse computer is out: the M3 Ultra Mac Studio. It has a lot of people wondering, “What the hell is all this power for?” It’s a valid question, especially in the enthusiast space, where the most demanding workloads tend to be things like gaming, video editing, and (more recently) coding. The thing is, those aren’t the only workflows out there. Not many people are exposed to these other classes of workloads, but they exist, and they’re honestly super cool.

Modest Gains in Known Workflows

Okay, so we’re getting to the point where a lot of these gains aren’t really being taken advantage of by typical YouTube workloads. Most people who make YouTube videos about technology started out as camera people. They liked technology and they liked making videos, so they made videos about technology. That means a lot of their experience with these workflows comes from that viewpoint: benchmarking took the form of video exports and NLE timeline performance. On the other end were the gamers who started making videos showing frame rates, plus charts and graphs about power consumption, heat output, fan noise, etc. These became the de facto standard computing benchmarks in the YouTube space.

More recently, a lot of developers have entered the scene, talking about code compilation, virtualization, and performance within IDEs: a different workflow that taxes the hardware in different ways. A lot of code runs on the CPU, with only specific tasks delegated to the GPU, whereas a lot of media work depends on the GPU. We also started to see musicians with vast libraries of software instruments and VSTs talking about DAW performance and how many tracks they could load. This is an interesting workload because it can tax the CPU and GPU in some unexpected ways. On top of that, a lot of data has to be loaded into RAM, so things like the 1.5TB of RAM in the Intel Mac Pro were enticing to audio engineers who needed to load hundreds of software instruments into memory at once (even entire orchestras).

We’re at the point now where computers are honestly pretty good at handling these workflows. My M4 Max MacBook Pro churns through Xcode, Cursor, Final Cut, Logic, Pixelmator, Docker, Figma, really anything I throw at it, like it’s nothing. We’re not seeing a whole lot of gains in these workflows anymore, but that doesn’t mean computers are stagnating. There’s a whole world of computing becoming available on desktop machines that used to be reserved for servers and specialized workstations.

Scientific & Academic Computing

There’s an entire class of workloads that historically hasn’t been run on personal computers, in applications like MATLAB, Jupyter, MinKNOW, AutoCAD, NASA TetrUSS, Redshift, Maya, and more. Some parts of these workflows could be done on consumer-level hardware, but a lot of it had to be reserved for specialized workstations, servers, or computing clusters (which are basically mini supercomputers). These are the new kinds of workflows Apple is starting to target with these devices.

How These Workflows Typically Work

The way most of this goes is that you do a lot of your basic computing, planning, simpler calculations, and such on your own consumer- or professional-grade machine. If you’re lucky, you’re given a far more powerful workstation-grade machine to work with, but those are few and far between. When you need more computing power than your own machine can provide, you have to run your computations on a High Performance Computing (HPC) cluster. That means moving your code over to the cluster, making sure it’s all ready to go, waiting for the cluster to get around to your job, and then getting the results back. It’s slow: depending on the complexity of the job, the load on the cluster, and its scale, this can take days or weeks.

What these new Macs are aiming for is to cut down on how much of that has to happen on specialized systems. Now, instead of waiting days for time on the cluster, you can spend a couple of hours crunching the numbers on your own machine. On top of that, the bigger computations that used to take over a week because of scheduling won’t take as long, because the smaller computations that used to fill the queue can now be done client-side. Overall, this speeds up much more than one individual’s research; it can save entire departments days or weeks across multiple research projects.

Wrap Up

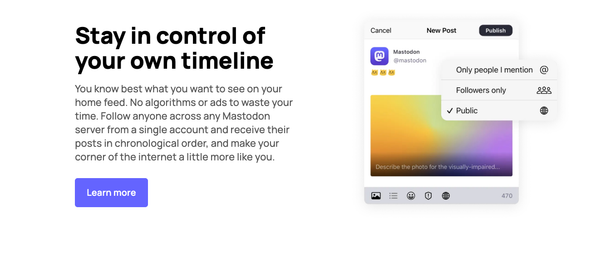

Remember: just because you can’t think of a workload doesn’t mean it doesn’t exist. Having built and maintained clusters like this, I can tell you these aren’t workloads people would know about outside of the academic world. Also, not every jump in performance is about making current workflows faster; often it’s about unlocking new ones. You can really see Apple going after these new workflows in its keynotes, which feature a lot more lab work than before. It’s a whole new world of computing that is now possible on Macs, and if you know where to look, it’s actually really fucking cool. Thoughts? Reach out on Bluesky or Mastodon.